Key Takeaways

- Companies are publishing millions of AI-generated articles in an attempt to drive traffic from Google Search and answer engines such as ChatGPT. Many case studies claim that this is an effective strategy. In a separate study, we show that there are now more AI-generated articles being published on the web than human-written articles.

- In this study, we show that, despite the ubiquity of AI-generated content, it does not perform well in search and answer engines:

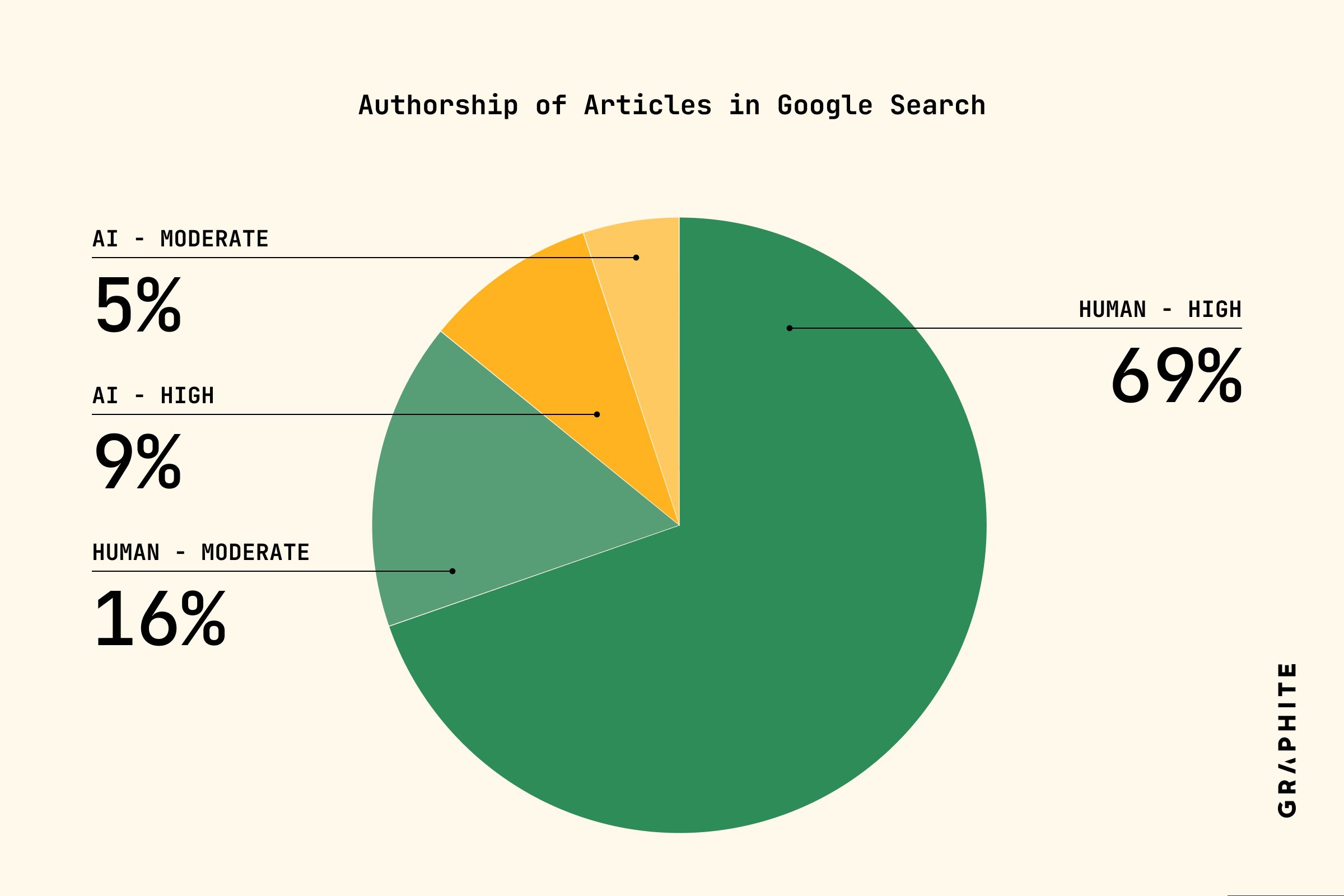

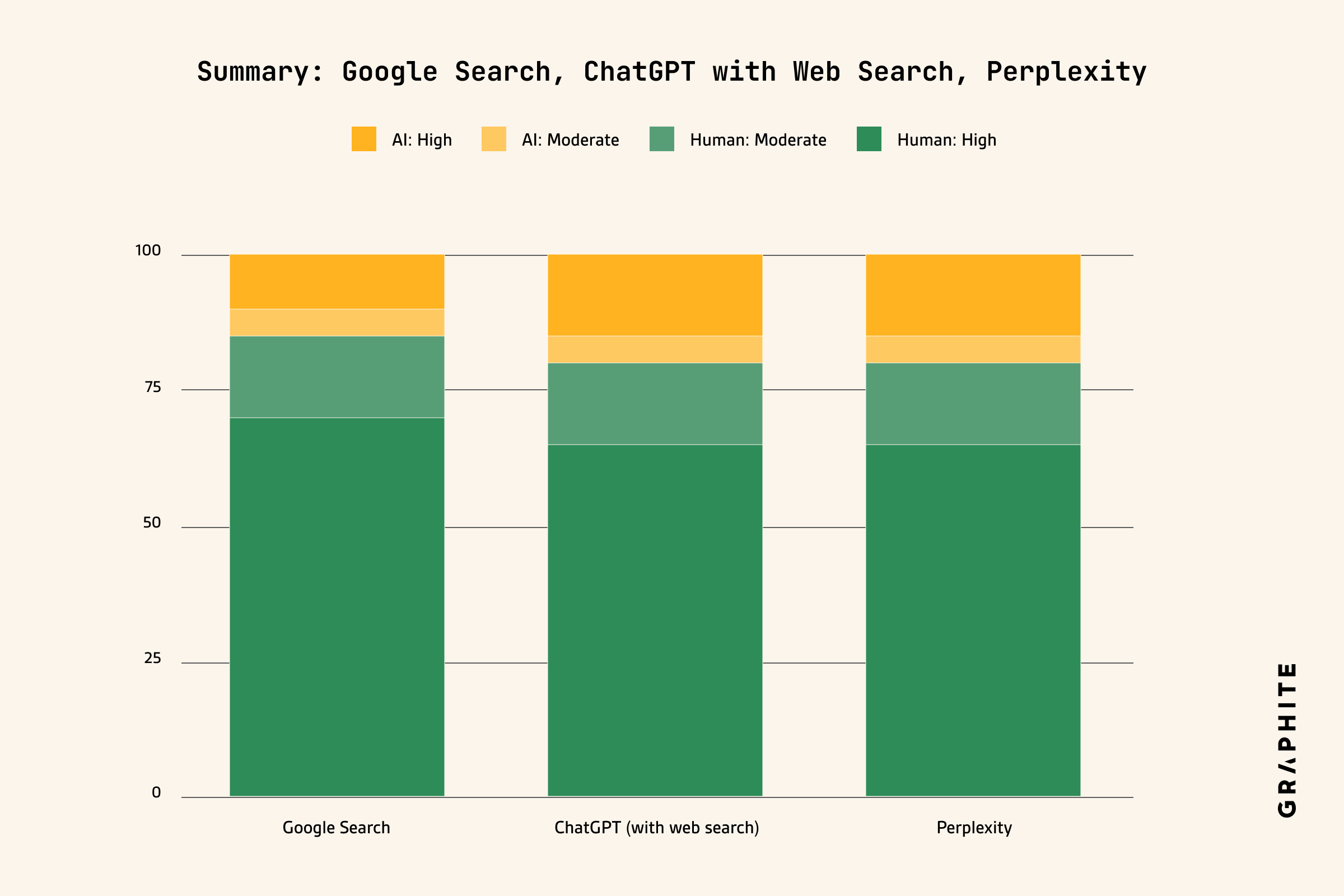

- 86% of articles ranking in Google Search are written by humans, and only 14% are generated using AI.

- 82% of articles cited by ChatGPT & Perplexity are written by humans, and only 18% are generated using AI.

- When AI-generated articles do appear in Google Search, they tend to rank lower than human-written articles.

- We did not evaluate the effectiveness of AI-assisted content with heavy human editing, and we believe this may be an effective strategy.

Motivation

Since ChatGPT launched in November 2022, many companies have published content generated by LLMs such as GPT-4, Claude, and Gemini to grow traffic from Google Search. The goal is to rank for popular searches with high-quality content at a minimal cost, rather than spending hundreds of dollars on human writing. Numerous articles and case studies have been published claiming that this is an effective strategy.

Traffic from answer engines like ChatGPT to websites has increased significantly since January 2025. Therefore, companies are now also exploring the use of AI-generated content to increase traffic from answer engines.

AI-generated content is becoming ubiquitous. In a separate study, we found that the number of AI-generated articles published online in November 2024 exceeded the number of human-written articles.

There is reason to believe AI-generated content could be effective. The quality of AI-generated content is rapidly improving, and in many cases, it is as good as, or even better than, content written by humans (MIT study). It is often hard for humans to distinguish whether content is AI-generated or human-written (Originality AI Post).

However, in May 2024, we conducted a rigorous analysis that contradicts the prevailing narrative that AI-generated content is an effective strategy for growing traffic from Google Search. We found that only 12% of articles in Google Search were generated by AI, and that human-written articles tended to outrank AI-generated articles.

In this study, we reevaluate the effectiveness of AI-generated articles in search and answer engines. Has the proportion of AI-generated articles in Google Search changed? How often do ChatGPT and Perplexity cite AI-generated content?

Results

Prevalence of AI-Generated Articles

We find that although there are now more AI-generated articles than human-written articles being published on the web (study), the vast majority of articles in Google Search, ChatGPT, and Perplexity are written by humans, not generated by AI.

Google Search: 86% of articles are written by humans, and only 14% are generated with AI.

In the pie chart below, we classify the articles into four distinct buckets. We provide the details in the Methodology section. (14% is the sum of the “AI-generated: high confidence” and “AI-generated: moderate confidence” buckets.)

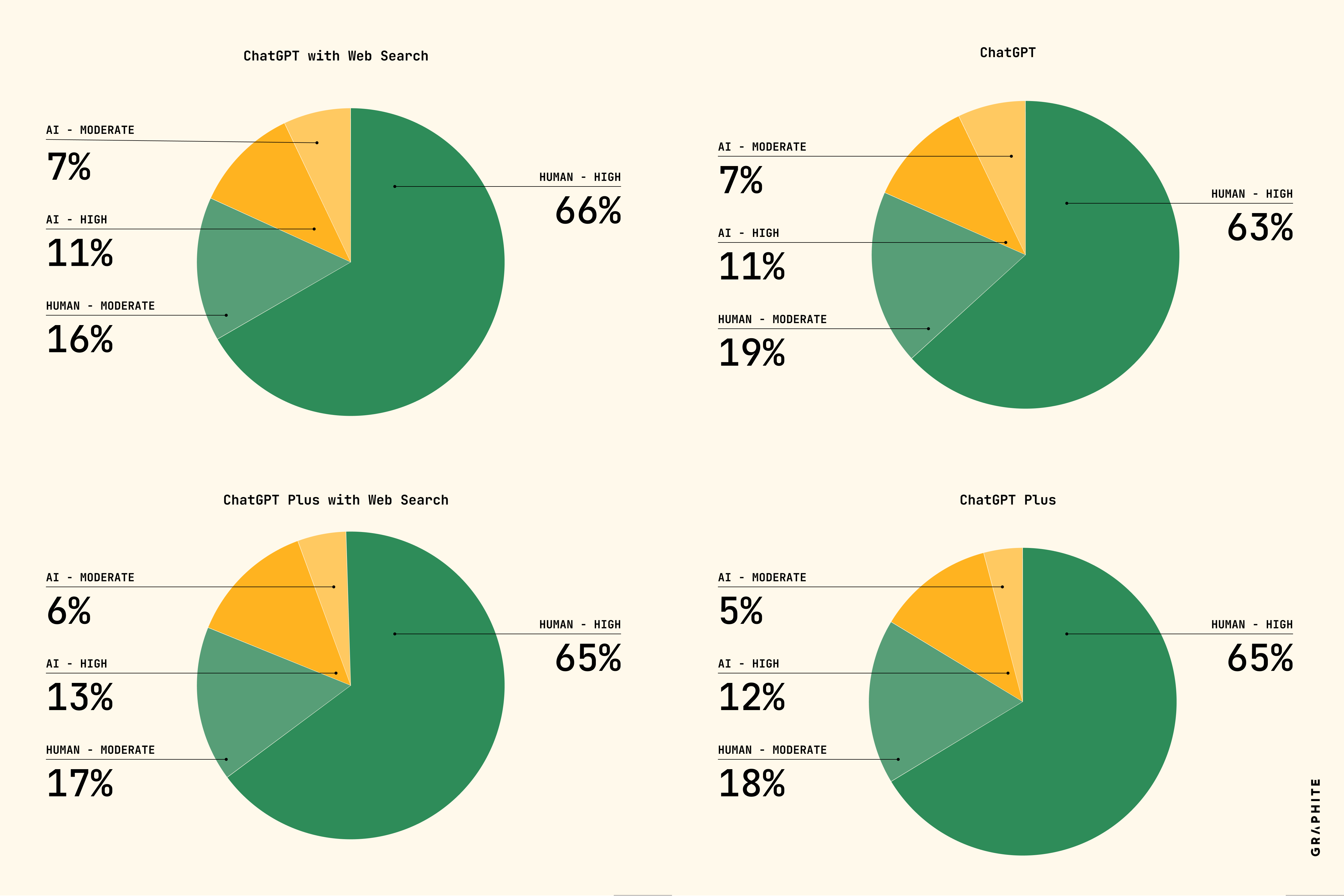

ChatGPT: 82% of cited articles are written by humans, and only 18% of cited articles are generated using AI (using ChatGPT with web search).

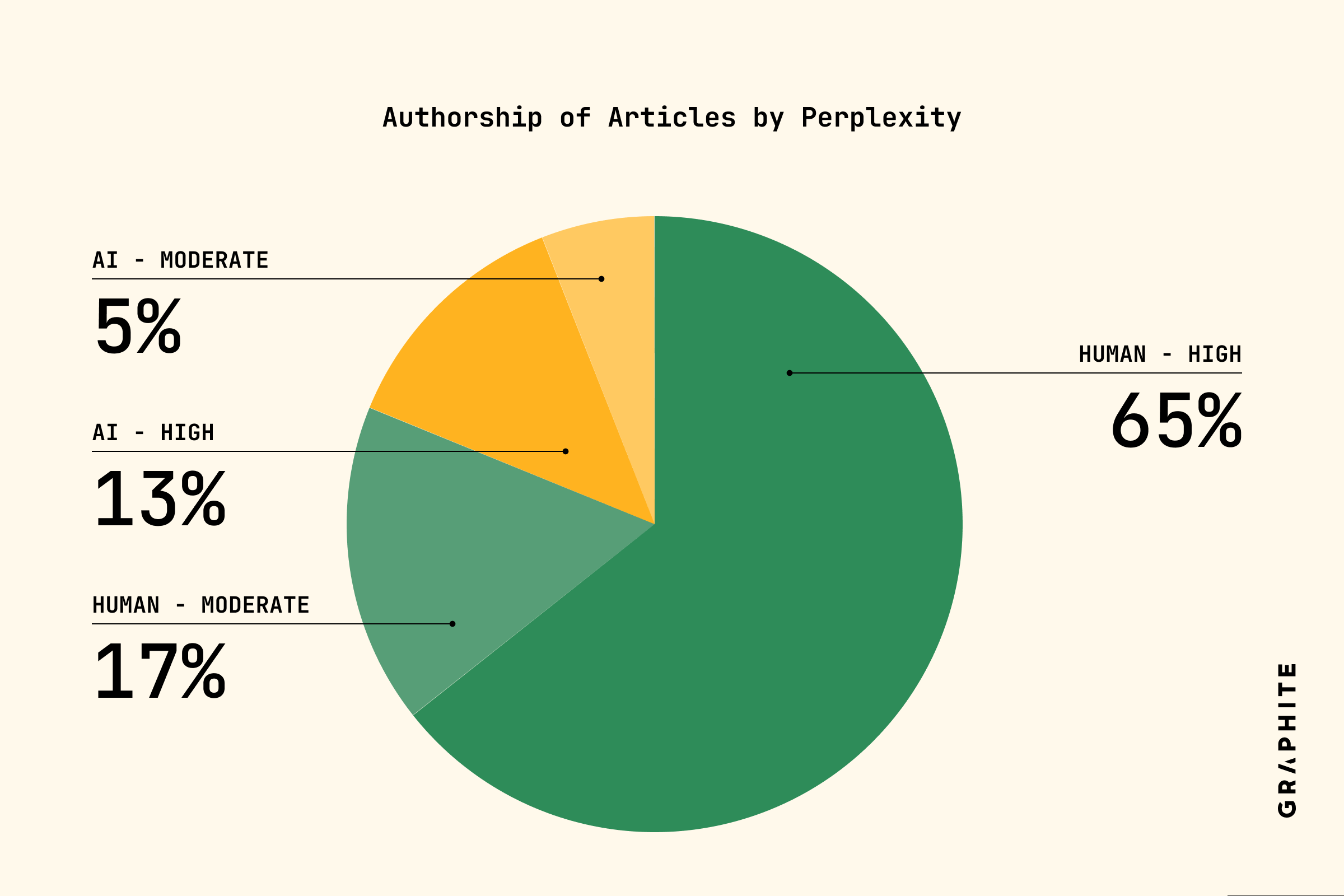

Perplexity: 82% of cited articles are written by humans, and only 18% are generated using AI.

AI-Generated Articles in Google Search: 2025 vs. 2024

In our 2024 study, we found that 12% of articles in Google Search were AI-generated, compared to 14% in 2025. These percentages are not directly comparable because they were computed with different AI detectors: the 2025 figure uses Surfer’s AI detector, whereas the 2024 figure used Originality.ai. Even so, we hypothesize that the two-point gap is not a meaningful difference, as we found that the proportion of AI-generated articles plateaued in May 2024 (study).

Ranking of AI-Generated Articles in Google Search

What happens when AI-generated articles do appear in Google Search? How well do they rank relative to human-written articles?

It is worth noting that ranking depends on many latent variables, and isolating the effect of a particular variable on ranking is challenging. With that caveat in mind, we proceed with the following analysis.

Note that if the use of AI did not affect ranking, then we would expect approximately 14% of all articles to be AI-generated at each position. However, we observe a smaller percentage of AI-generated articles near the top of the SERP, especially in the top three positions, and a higher proportion of AI-generated articles after the tenth position. For example, only 7% of the articles ranking number one are AI-generated.
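The comparison above can be sketched in a few lines. This is a minimal illustration, not the pipeline used in the study: the `(position, is_ai)` row format and the function names are assumptions, and the toy data is made up.

```python
from collections import defaultdict


def ai_share_by_position(rows):
    """Return {position: share of AI-generated articles at that position}.

    rows: iterable of (position, is_ai) pairs, one per ranked article
    (a hypothetical input format).
    """
    totals = defaultdict(int)
    ai_counts = defaultdict(int)
    for position, is_ai in rows:
        totals[position] += 1
        if is_ai:
            ai_counts[position] += 1
    return {pos: ai_counts[pos] / totals[pos] for pos in sorted(totals)}


def overall_ai_share(rows):
    """Share of AI-generated articles across all positions (the baseline)."""
    rows = list(rows)
    return sum(1 for _, is_ai in rows if is_ai) / len(rows)


# Made-up toy data: four articles at position 1, four at position 2.
toy_rows = [
    (1, False), (1, False), (1, False), (1, True),
    (2, False), (2, True), (2, True), (2, False),
]
shares = ai_share_by_position(toy_rows)
baseline = overall_ai_share(toy_rows)

for position, share in shares.items():
    # A share below the baseline means AI articles are underrepresented there.
    print(position, f"{share:.0%}", "vs. baseline", f"{baseline:.0%}")
```

If AI use had no effect on ranking, each per-position share would sit close to the baseline; in the study the baseline is roughly 14%.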

We also provide a second test. First, we identify keywords whose results include both AI-generated and human-written pages. Then, for each keyword, we randomly select one AI-generated page and one human-written page. If the way an article was produced had no effect on rank, we would expect the AI-generated and human-written pages to have similar average positions. Instead, we find that the human-written pages rank higher (closer to the top of the page), and this difference is statistically significant under a Wilcoxon signed-rank test with p < 1e-6.
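A rough sketch of this paired test follows. It is an illustration under stated assumptions rather than the code used in the study: the `rankings` input format and function names are hypothetical, the toy data is made up, and the p-value uses the large-sample normal approximation without tie or continuity corrections, so a statistics library is preferable for real analysis.

```python
import math
import random


def sample_pairs(rankings, seed=0):
    """Pick one AI-generated and one human-written position per keyword.

    rankings: {keyword: [(position, is_ai), ...]} (hypothetical format).
    Keywords lacking either kind of page are skipped.
    """
    rng = random.Random(seed)
    pairs = []
    for keyword in sorted(rankings):
        ai = [pos for pos, is_ai in rankings[keyword] if is_ai]
        human = [pos for pos, is_ai in rankings[keyword] if not is_ai]
        if ai and human:
            pairs.append((rng.choice(ai), rng.choice(human)))
    return pairs


def wilcoxon_signed_rank(pairs):
    """Two-sided Wilcoxon signed-rank test via the normal approximation.

    Returns (w_plus, z, p_value). Zero differences are dropped and tied
    absolute differences receive average ranks.
    """
    diffs = [ai_pos - human_pos for ai_pos, human_pos in pairs
             if ai_pos != human_pos]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    # Sum of ranks where the AI page sits lower on the page (larger position).
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return w_plus, z, p_value


# Made-up toy data; real inputs would cover thousands of keywords.
toy_rankings = {
    "keyword a": [(1, False), (5, True)],
    "keyword b": [(2, False), (3, False)],  # no AI page, so skipped
    "keyword c": [(2, False), (7, True), (9, True)],
    "keyword d": [(4, False), (3, True)],
}
toy_pairs = sample_pairs(toy_rankings)
print(wilcoxon_signed_rank(toy_pairs))
```

A positive `z` here means the sampled AI-generated pages tend to sit at larger position numbers, that is, lower on the page, than their human-written counterparts.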

In summary, when AI-generated articles appear in Google Search, they tend to rank lower than human-written articles.

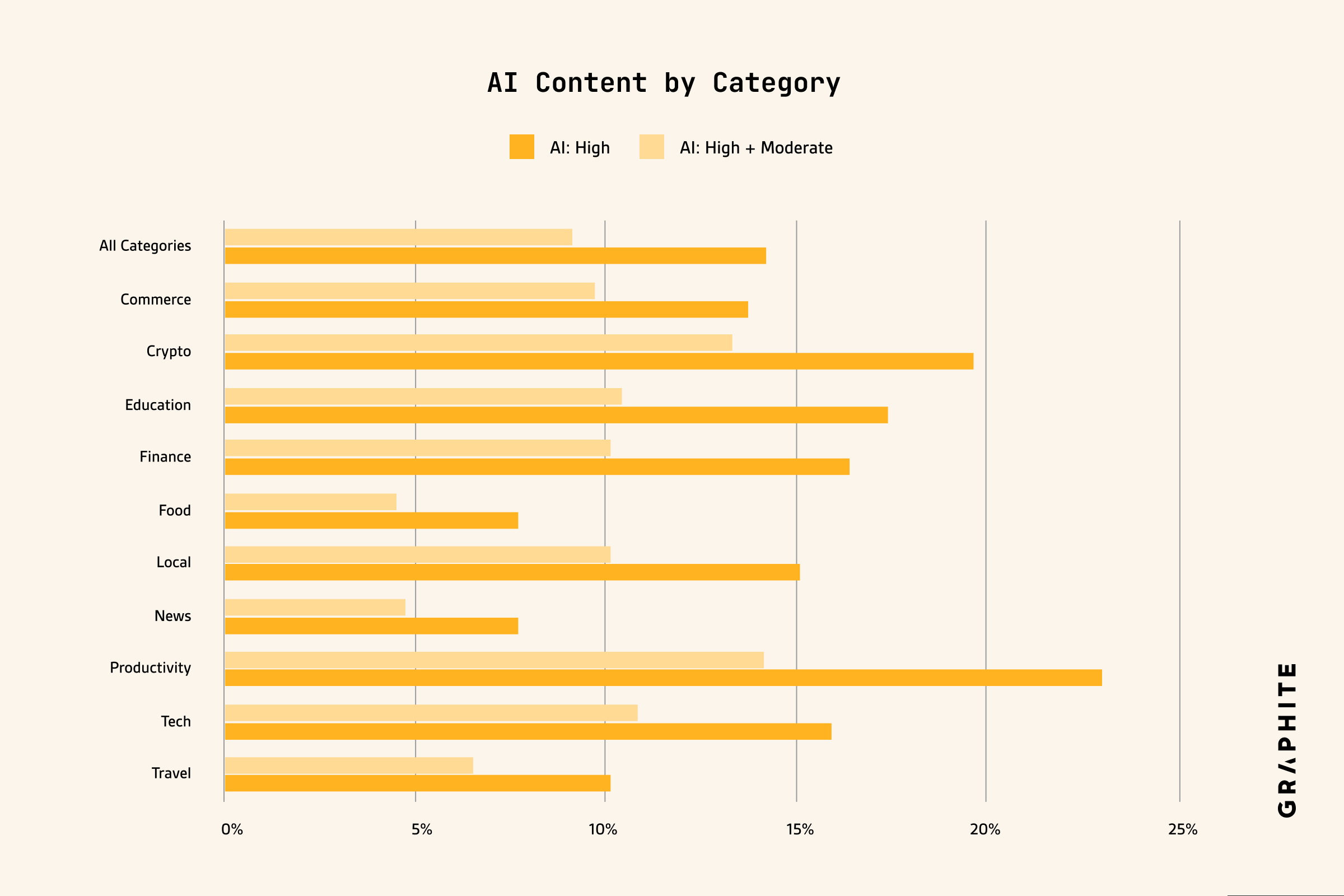

Prevalence of AI-Generated Articles by Category

Finally, we report the percentage of AI-generated articles in Google Search for each category.

Some categories, like Food, contain very little AI-generated content. The categories with the fewest “AI: high confidence” articles are Food (4.5%), News (4.7%), and Travel (6.5%). The categories with the most “AI: high confidence” articles are Productivity (14.1%), Crypto (13.3%), Tech (10.8%), and Education (10.4%).

Methodology

Our methodology is similar to what we described in our 2024 report.

We use a larger set of keywords for this study, a total of 31,493 across 10 categories.

For each keyword, we collected the first two pages of Google results (SERPs) in June 2025. From each SERP, we selected the English-language articles and listicles, as identified by Graphite’s page type classifier.

To get citations from answer engines, we sample 100 keywords per category and use an LLM to rewrite them as questions with the same intent. For ChatGPT, we ask the questions manually in ChatGPT in the browser and extract the citations. We gather data with both ChatGPT and ChatGPT Plus (the paid version), with and without clicking the “Web Search” button to trigger the use of search, resulting in four datasets. For Perplexity, we gather citation data using their API. For both ChatGPT and Perplexity, we keep only citations that are English-language articles and listicles, as identified by Graphite’s page type classifier.

To classify each article, we split it into 500-word chunks, classify each chunk using Surfer’s AI detector (rather than Originality.ai), and then aggregate the chunk classifications, weighted by chunk length, to get an article-level classification. In particular, we categorize articles as follows:

- Human: high confidence: > 90% human

- Human: moderate confidence: > 50% and < 90% human

- AI: moderate confidence: > 50% and < 90% AI

- AI: high confidence: > 90% AI
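The chunk-and-aggregate step can be sketched as follows. This is a minimal illustration under several assumptions, not Surfer’s API or our production code: `detect_ai_score` is a hypothetical callable returning a score between 0 (human) and 1 (AI) for a chunk, the function names are ours, and the handling of scores that fall exactly on the 50% or 90% boundaries is a guess, since the thresholds above do not specify it.

```python
def split_into_chunks(text, chunk_words=500):
    """Split text into lists of at most chunk_words words each."""
    words = text.split()
    return [words[i:i + chunk_words] for i in range(0, len(words), chunk_words)]


def article_ai_share(text, detect_ai_score, chunk_words=500):
    """Length-weighted average of per-chunk AI scores.

    detect_ai_score: hypothetical callable, chunk text -> score in [0, 1].
    """
    chunks = split_into_chunks(text, chunk_words)
    if not chunks:
        raise ValueError("article has no words")
    total_words = sum(len(chunk) for chunk in chunks)
    weighted = sum(detect_ai_score(" ".join(chunk)) * len(chunk)
                   for chunk in chunks)
    return weighted / total_words


def bucket(ai_share):
    """Map an article-level AI share to one of the four buckets.

    Assumption: exact boundary values (10%, 50%, 90% AI) fall into the
    moderate-confidence buckets, with exactly 50% counted as human.
    """
    if ai_share > 0.9:
        return "AI: high confidence"
    if ai_share > 0.5:
        return "AI: moderate confidence"
    if ai_share < 0.1:
        return "Human: high confidence"
    return "Human: moderate confidence"


def stub_detector(chunk_text):
    """Stand-in for a real detector: flags chunks containing 'robot'."""
    return 1.0 if "robot" in chunk_text else 0.0


# Made-up article: a 500-word "AI" chunk followed by a 250-word "human" chunk.
toy_article = " ".join(["robot"] * 500 + ["person"] * 250)
toy_share = article_ai_share(toy_article, stub_detector)
print(round(toy_share, 3), bucket(toy_share))
```

Weighting by chunk length keeps a short final chunk from counting as much as a full 500-word chunk, which matters for articles whose length is not a multiple of 500 words.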

Note: We evaluate the accuracy of Surfer’s AI detector in another study, and find a false positive rate of 4.2% and a false negative rate of 0.6%.

Discussion

AI-Assisted Content

Rather than publishing fully AI-generated content, another strategy is to generate a first draft with AI and then have a human edit or rewrite it. Our research did not evaluate the prevalence of content created using this strategy, and we believe it may be more effective in search and answer engines.

Conflicting Data & Case Studies

While our research shows that content generated by AI does not perform well in Google Search or LLMs, we encourage readers to share data that contradicts our findings, as well as data that supports them.